Lighthouse laser, Da Peng VR, and Oculus Constellation: which VR positioning scheme is the strongest?

Spatial positioning is key to VR technology. Manufacturers have adopted a range of spatial positioning schemes; at present, the better ones on the market are Oculus' Constellation system, which uses infrared camera positioning, and the lighthouse laser positioning systems of HTC Vive and Da Peng VR. Both approaches ultimately solve a PnP problem, but each has its own limitations and suitable scenarios. Which is best? The sections below analyze them one by one.

Oculus Constellation positioning system

Oculus uses a camera-based positioning solution known as Constellation. The Oculus headset and controllers are covered with infrared LEDs that flash in a fixed pattern.

A dedicated camera captures images at a fixed rate (60 fps on the Oculus CV1). From the 2D positions of the LED points in each image and the known 3D model of the headset or controller, the system back-computes the position of those points in 3D space. The process can be broken into the following steps.

First, to ensure the LEDs on the headset and controllers can be located accurately, the Oculus camera software/driver on the PC sends a command over the HID interface to light the LEDs brightly enough to be captured by the camera, and the LEDs flash in specific patterns. This ensures that, even with partial occlusion, the tracking system works properly and is not affected by other noise signals in the environment, as long as enough LEDs are captured from different angles.

Next, the position and orientation of each captured LED are recorded.

To do this well, the Oculus camera does not need the color of the LEDs; it only records the brightness of each point. The image recorded by the Oculus camera firmware is therefore 752 × 480 pixels in Y8 grayscale format.

We now have the 2D positions of the headset's LEDs in the image and the 3D model of the LEDs on the headset. Is there a way to estimate the 3D positions of these points? This is essentially a PnP (Perspective-n-Point) problem (https://en.wikipedia.org/wiki/Perspective-n-Point): given a model of n 3D points (such as the 3D layout of the LEDs on the headset), the set of n 2D points captured by the camera, and the camera's intrinsic parameters, one can compute the position (X, Y, Z) and orientation (yaw, pitch, roll) of the model.
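PnP inverts the camera's projection, so it helps to see the forward direction first. The sketch below projects a set of model points into pixel coordinates for a given 6DOF pose using a plain pinhole model. The focal lengths, principal point, LED layout, and the yaw/pitch/roll axis convention are illustrative assumptions, not values from Oculus.

```python
import math

def rotation_from_ypr(yaw, pitch, roll):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians.
    The axis convention is an assumption made for this sketch."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def project(model_points, pose, fx, fy, u0, v0):
    """Project model-frame 3D points to pixels for pose (x, y, z, yaw, pitch, roll)."""
    x, y, z, yaw, pitch, roll = pose
    R = rotation_from_ypr(yaw, pitch, roll)
    pixels = []
    for px, py, pz in model_points:
        # Rigid transform from the model frame into the camera frame.
        xc = R[0][0] * px + R[0][1] * py + R[0][2] * pz + x
        yc = R[1][0] * px + R[1][1] * py + R[1][2] * pz + y
        zc = R[2][0] * px + R[2][1] * py + R[2][2] * pz + z
        # Pinhole projection with the camera intrinsics.
        pixels.append((fx * xc / zc + u0, fy * yc / zc + v0))
    return pixels

# Hypothetical LED layout (metres) and intrinsics for a 752 x 480 image.
leds = [(0.05, 0.0, 0.0), (-0.05, 0.0, 0.0), (0.0, 0.05, 0.0), (0.0, -0.05, 0.0)]
pose = (0.0, 0.0, 1.0, 0.0, 0.0, 0.0)  # one metre in front of the camera
print(project(leds, pose, fx=700.0, fy=700.0, u0=376.0, v0=240.0))
```

A PnP solver searches for the pose whose projected points best agree with the 2D points actually observed.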

How large must this set of points be? This is an optimization problem. A common choice is n ≥ 3, that is, the position and orientation can be solved as soon as at least three LEDs are captured in the image. Allowing for occlusion or blurred images, in practice at least 4-5 points must be captured before the position and orientation of the whole headset can be computed correctly, which is an important reason why the Oculus headset is covered with so many LEDs.

How is the error in the computed result handled? A common approach is to take the resulting 6DOF pose, use it to project the 3D model again into a new 2D image, compare that image with the one the calculation started from, and obtain an error function that is then used to refine the estimate. This raises another question: when comparing, how do you know which point on the 3D model corresponds to which point in the captured 2D image? Testing every possible correspondence is too expensive computationally. So Oculus makes each LED flash in a different pattern, which allows points on the 3D model to be matched quickly to points in the captured image.
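The two ideas in this step, identifying each LED by its blink pattern and scoring a pose by reprojection error, can be sketched as follows. The 4-frame codes, the brightness threshold, and the function names are hypothetical; the real Constellation codes are not given here, and the sketch assumes the brightness samples start at the beginning of a pattern.

```python
import math

# Hypothetical blink codes: 1 = bright frame, 0 = dim frame.
LED_CODES = {
    0: (1, 0, 0, 1),
    1: (0, 1, 1, 0),
    2: (1, 1, 0, 0),
    3: (0, 0, 1, 1),
}

def identify_led(brightness, threshold=128):
    """Return the id of the LED whose code matches the samples, or None."""
    bits = tuple(1 if b >= threshold else 0 for b in brightness)
    for led_id, code in LED_CODES.items():
        if code == bits:
            return led_id
    return None

def rms_reprojection_error(predicted, observed):
    """RMS pixel distance over the LED ids present in both dicts."""
    common = predicted.keys() & observed.keys()
    if not common:
        raise ValueError("no matched LEDs")
    total = sum(
        (predicted[i][0] - observed[i][0]) ** 2
        + (predicted[i][1] - observed[i][1]) ** 2
        for i in common
    )
    return math.sqrt(total / len(common))

# Each blob: (pixel position, Y8 brightness over four consecutive frames).
blobs = [((411.5, 240.0), (200, 30, 40, 220)), ((341.0, 239.0), (20, 210, 190, 35))]
observed = {}
for xy, samples in blobs:
    led_id = identify_led(samples)
    if led_id is not None:
        observed[led_id] = xy

# Pixel positions predicted by projecting the model with the estimated pose.
predicted = {0: (411.0, 240.0), 1: (341.0, 240.0)}
print(rms_reprojection_error(predicted, observed))
```

Because every blob carries its own id, matching costs one lookup per blob instead of a search over all possible pairings.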

Furthermore, the pose obtained optically (from the camera image) contains error, mainly because points are hard to identify in images captured while the object is moving quickly. To reduce this error, the pose obtained by PnP is further corrected using IMU data, a step known as sensor data fusion.
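One simple way to picture this fusion is a complementary filter: integrate the gyro at a high rate and pull the result gently toward the optical estimate whenever one is available. This is a minimal single-angle sketch of that general technique, not the filter Oculus actually uses (production trackers typically use Kalman-style filters); the function name and the blend factor `alpha` are assumptions, and angle wrap-around is ignored.

```python
def fuse_yaw(prev_yaw, gyro_rate, dt, optical_yaw=None, alpha=0.98):
    """One complementary-filter step on a single angle (radians).

    prev_yaw    -- fused yaw from the previous step
    gyro_rate   -- angular velocity from the IMU (rad/s)
    dt          -- time since the previous step (s)
    optical_yaw -- yaw from the PnP solution, or None if no usable fix
    alpha       -- weight given to the gyro prediction (assumed value)
    """
    predicted = prev_yaw + gyro_rate * dt  # dead-reckon from the gyro
    if optical_yaw is None:
        # Fast motion or occlusion: rely on the IMU alone for this step.
        return predicted
    # Blend: the gyro tracks fast motion, the optics remove slow drift.
    return alpha * predicted + (1.0 - alpha) * optical_yaw

yaw = 0.0
for step in range(10):
    # IMU sample every 1 ms; an optical fix arrives on every fifth step.
    fix = 0.01 if step % 5 == 4 else None
    yaw = fuse_yaw(yaw, gyro_rate=1.0, dt=0.001, optical_yaw=fix)
print(yaw)
```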

As the description above shows, camera-based optical positioning is relatively simple to install and configure and relatively cheap, but the image processing is relatively complex, locating the object becomes harder when it moves quickly, and the system is susceptible to interference from natural light.

In addition, the accuracy of camera-based positioning is limited by the camera's own resolution. For example, the Oculus Rift's camera is 720p, which makes sub-millimeter positioning accuracy difficult to achieve.
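A rough sense of scale comes from asking how much space a single pixel covers at the tracked object's distance. The sketch below computes that footprint; the 100 degree horizontal field of view and the 1.5 m distance are assumed values chosen for illustration, and 1280 is the width of a 720p image.

```python
import math

def pixel_footprint_mm(distance_m, horizontal_fov_deg, width_px):
    """Width in millimetres covered by one pixel at the given distance."""
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    view_width_m = 2.0 * distance_m * math.tan(half_fov)
    return view_width_m / width_px * 1000.0

# Assumed field of view and distance; 1280 px is the width of a 720p frame.
print(round(pixel_footprint_mm(1.5, 100.0, 1280), 2))
```

Under these assumptions one pixel spans a few millimetres. LED blob centers can be located to a fraction of a pixel, so this is an order-of-magnitude guide rather than a hard limit.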

Finally, the camera's effective capture range is relatively short, so it cannot be used for positioning in a large room and generally provides only desktop-level VR positioning. Oculus has, however, recently offered a three-camera solution in an attempt to compete with lighthouse positioning at room scale.

Lighthouse laser positioning technology

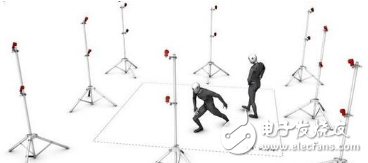

By contrast, the lighthouse laser positioning technology offered by HTC, Da Peng, and other companies avoids the high complexity of camera-based positioning and offers high positioning accuracy, fast response, and distributed processing. It lets users move about within a given space with few restrictions, suits games that require movement, and truly achieves room-scale VR positioning.

The following explains, starting from how lighthouse positioning works, why lighthouse laser positioning is the better choice for large-space applications.

HTC's lighthouse positioning system has two base stations, each with two motors: one sweeps horizontally and the other sweeps vertically.

The base station refresh rate is 60 Hz. Motor 1 on base station A first sweeps in the horizontal direction; 8.33 ms later, motor 2 sweeps in the vertical direction (the second 8.33 ms). Base station A then turns off, and base station B repeats the same sequence as base station A.

In this way, as long as enough sensor points are hit by both the vertical and horizontal sweeps within 16 ms, the angles of those sensor points relative to the base station's reference plane can be calculated, and the coordinates of the illuminated sensor points on the projection plane can be obtained as well.
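The conversion from hit time to angle, and from two angles to a point on the projection plane, can be sketched as follows. It assumes the rotor turns 60 times per second, so that each 8.33 ms window covers 180 degrees, and that a sweep angle of 90 degrees points straight out of the base station; both are assumptions made for illustration, and the function names are hypothetical.

```python
import math

ROTATION_HZ = 60.0  # assumed rotor speed, based on the 60 Hz figure in the text

def sweep_angle(dt_s):
    """Angle (radians) the laser plane has turned dt_s seconds into a sweep."""
    return 2.0 * math.pi * ROTATION_HZ * dt_s

def plane_coords(dt_horizontal_s, dt_vertical_s):
    """Coordinates of a sensor on a plane one unit in front of the base station.

    Assumes a sweep angle of 90 degrees points straight ahead.
    """
    azimuth = sweep_angle(dt_horizontal_s) - math.pi / 2.0
    elevation = sweep_angle(dt_vertical_s) - math.pi / 2.0
    return math.tan(azimuth), math.tan(elevation)

# A sensor hit 6.25 ms into the horizontal sweep and 1/240 s (about 4.17 ms,
# the midpoint) into the vertical sweep.
print(plane_coords(0.00625, 1.0 / 240.0))
```

The resulting pair plays the same role as a pixel coordinate in the camera case, which is why the next step reduces to the same kind of pose problem.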

At the same time, the coordinates of these points in space when at rest are known and serve as a reference, so the rotation and translation of the currently illuminated points relative to that reference can be calculated, which in turn gives the coordinates of these points. This, too, is in fact a PnP problem.

Further, by fusing in the orientation obtained from the IMU, the position and orientation of the headset or controller can be determined more accurately.

The position and orientation of the headset/controller computed in the previous step are transmitted by RF to a receiver connected to the PC. The receiver then passes the data over USB to the driver or the OpenVR runtime on the PC, and finally on to the game engine and game applications.

Da Peng VR positioning solution

Compared with HTC's positioning technology, Da Peng has innovated further in laser positioning. Each base station has three motors, numbered from top to bottom: motor 1 scans in the vertical direction, motor 2 scans in the horizontal direction, and motor 3 scans in the vertical direction.

The interval between successive motor scans is 4 ms. While one motor is scanning, the other motors are off. The basic timing is as follows:

Motor 1 on the base station first sends a synchronization light signal to the sensor points on the headset/controller, and the sensor points clear their counters; motor 1 then sweeps in the vertical direction. After 4 ms, motor 2 sweeps in the horizontal direction (the second 4 ms), and then motor 3 sweeps in the vertical direction (the third 4 ms).

An illuminated sensor point can easily record the time at which it was hit, along with the time at which each motor started scanning. Because the motor's rotation speed is fixed, the angle of the sensor point relative to the scanning plane can be obtained. This angle is encoded and transmitted to the PC over RF, and after further pose fusion the PC can readily obtain 6DOF data.
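On the sensor side, this timing amounts to a small decoding step: the counter value at the moment of the hit says which motor's 4 ms slot it fell in and how far into that sweep the laser had turned. The sketch below uses a microsecond counter; the 180 degree sweep span per slot and all names are assumptions for illustration, since the actual rotation speed is not stated.

```python
import math

SLOT_US = 4000  # 4 ms per motor, from the timing described above
MOTORS = ("motor 1 (vertical)", "motor 2 (horizontal)", "motor 3 (vertical)")
SWEEP_SPAN_RAD = math.pi  # assumed: each sweep covers 180 degrees in one slot

def decode_hit(counter_us):
    """Map a counter value (microseconds since the sync flash) to (slot, angle).

    slot is the index into MOTORS; angle is in radians from the sweep start.
    """
    slot, dt_us = divmod(counter_us, SLOT_US)
    if not 0 <= slot < len(MOTORS):
        raise ValueError("hit outside the 12 ms scan cycle")
    # Fixed rotation speed means angle is proportional to elapsed time.
    angle = SWEEP_SPAN_RAD * dt_us / SLOT_US
    return slot, angle

slot, angle = decode_hit(6000)
print(MOTORS[slot], math.degrees(angle))
```

With three sweeps per 12 ms cycle, each sensor yields three angle measurements per cycle instead of two.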

Increasing the number of scans per unit time greatly reduces the number of sensors required. In actual tests with Da Peng's latest E3P, the number of sensors needed is about a quarter of Lighthouse's (Lighthouse uses dozens of sensors): the headset and controllers each need only a single-digit number of sensors, which makes them lighter. This not only greatly improves the stability of the whole positioning system but also simplifies the layout of the sensors on the headset or controller.

Further, since the interval between scans is shorter, information such as the controller's pose can be sent to the PC at a higher rate (once every 4 ms) and reflected in the VR game promptly.

When playing VR games or applications, occlusion of various kinds is inevitable. To let users play in a room-scale space, the lighthouses must be able to illuminate the headset and controllers from all 360 degrees. Da Peng VR's solution, like HTC's, uses dual base stations. The working sequence of each base station is the same as in the single-base-station case; the only difference is that while the primary base station is working the secondary base station is off, and vice versa.

Da Peng VR's laser positioning is inherently stable and needs fewer sensor points, which makes the solution well suited to the education industry and to multiplayer online battles.

Online multiplayer battles are also an area where the Da Peng VR positioning edition excels.

For example, in scenarios such as Internet cafes, only one or two base stations are needed to cover multiple users, which reduces cost.

In summary, compared with the infrared camera scheme, laser positioning has clear advantages for multi-user online applications in large spaces.
