Introduction to NPU and performance analysis of Kirin 970's NPU

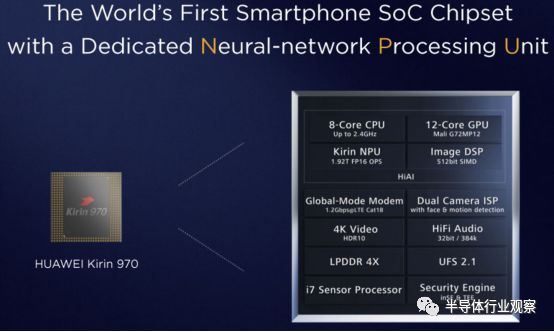

Last year, Huawei introduced the Kirin 970, the industry's first mobile chip with an integrated NPU. As the flagship of the new generation, the CPU on this SoC has 8 cores: 4 high-performance cores based on ARM's stock Cortex-A73 design, clocked at up to 2.4GHz (2.36GHz on the Kirin 960), and 4 low-power cores based on ARM's stock Cortex-A53 design, clocked at up to 1.8GHz (1.84GHz on the Kirin 960). The GPU uses ARM's latest Mali-G72 architecture.

In addition to the communication baseband, ISP, DSP, codec and coprocessors required in a traditional handset SoC, the Kirin 970 also integrates an NPU specifically tailored for deep learning, with FP16 performance of 1.92 TFLOPS. Specifically, Huawei puts the NPU's performance at 25 times that of the CPU and 6.25 times (25/4) that of the GPU, and its energy efficiency at 50 times that of the CPU and 6.25 times (50/8) that of the GPU. This is Huawei's trump card for the hot artificial intelligence market.
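
The ratios in parentheses follow from simple division. As a quick check, the sketch below assumes the "/4" and "/8" terms mean that the GPU is 4 times as fast and 8 times as energy-efficient as the CPU; that reading is an interpretation of the quoted figures, not a number stated separately.

```python
# Relative figures as quoted above, with the CPU as the baseline (1.0).
# Assumption: the "/4" and "/8" terms are the GPU's advantage over the CPU.
npu_vs_cpu_perf = 25.0
gpu_vs_cpu_perf = 4.0
npu_vs_cpu_eff = 50.0
gpu_vs_cpu_eff = 8.0

# Dividing two CPU-relative figures gives the NPU's advantage over the GPU.
npu_vs_gpu_perf = npu_vs_cpu_perf / gpu_vs_cpu_perf
npu_vs_gpu_eff = npu_vs_cpu_eff / gpu_vs_cpu_eff

print(npu_vs_gpu_perf)  # 6.25
print(npu_vs_gpu_eff)   # 6.25
```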

All of the above are Huawei's own claims. Let's look at the real strength of Huawei's NPU. First, let's review the concept of an NPU.

What is an NPU?

To be precise, when we talk about the use of artificial intelligence in computing, we mostly mean machine learning.

When we discuss deep learning at the hardware level, we are talking about optimizing and executing convolutional neural networks (CNNs) on specialized hardware blocks.

Explaining how convolutional neural networks work goes well beyond the scope of this article. The research began in the 1980s, and its fundamental purpose is to try to simulate the behavior of the neurons in the human brain.

Note that the key word here is "simulate": so far, no neural network hardware actually mimics the structure of the human brain.

However, the theory is well developed in academia. Over the past decade, software has been developed that runs this simulation on GPU hardware.

For example, researchers keep iterating on CNN models, greatly improving their accuracy and efficiency.

Of course, the GPU is not the best hardware for running artificial intelligence, nor is it the only processor that can perform highly parallel operations.

With the continuous development of artificial intelligence, more and more companies hope to commercialize artificial intelligence in practical applications, which requires hardware to provide higher performance and higher efficiency.

Therefore, we have also seen the emergence of more specialized processors whose architectures target applications such as machine learning.

Google was the first company to announce such hardware, launching the TPU in 2016. However, while such specialized hardware handles artificial intelligence workloads with better performance and power efficiency, it also gives up flexibility.

Google TPU chip and motherboard

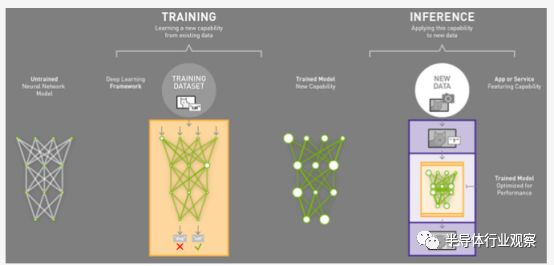

Two main aspects determine how efficiently these specialized artificial intelligence processors work. First, there is the trained model, which holds the data the model will rely on in future operation. Training a model is computationally intensive, and a large amount of training is required to reach high precision. In other words, training an effective neural network is far more demanding than actually using it.

Therefore, the general idea is that the bulk of model training is done on larger GPU servers or TPU cloud servers.

Second, running a neural network means executing the trained model: new data is continuously fed in, and the model's calculations are carried out on it. Feeding input data through the model to obtain an output result is called inference.

However, inference and training place very different demands on computation.

Although both inference and training require dense parallel computing, inference can be done with lower-precision arithmetic and needs less computational performance than training, which means that inference can run on cheaper hardware.
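
To make the lower-precision point concrete, here is a minimal sketch that rounds a handful of weights to half precision (FP16, the format quoted for the NPU) and compares the result of a dot product against the full-precision one. The weights and inputs are hypothetical values chosen only for illustration, and `to_fp16` and `dot` are helper names invented for this example. Only the stored values are rounded; the accumulation itself still runs in Python's double precision, which is a simplification of what real hardware does.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dot(weights, inputs):
    """Plain dot product, the basic operation inside a neural network layer."""
    return sum(w * x for w, x in zip(weights, inputs))


# Hypothetical weights and inputs, for illustration only.
weights = [0.1234567, -0.7654321, 0.3333333, 0.9876543]
inputs = [1.5, -2.25, 0.75, 3.0]

full = dot(weights, inputs)
half = dot([to_fp16(w) for w in weights], [to_fp16(x) for x in inputs])

# Relative error introduced by storing the operands in FP16.
rel_error = abs(full - half) / abs(full)
print(f"full precision: {full:.6f}")
print(f"half precision: {half:.6f}")
print(f"relative error: {rel_error:.2e}")
```

For these values the relative error stays below 0.1%, which is the kind of small deviation that makes reduced precision attractive for inference.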

This in turn leads the industry to move inference toward edge devices (user devices), which are becoming more performant and more power-efficient.

That is to say, if a trained model is stored on the local device, the device can perform inference itself without uploading data to a cloud server for processing.

This alleviates potential latency, power consumption and bandwidth issues, and it also avoids privacy concerns because the user's data never leaves the device.

As neural network inference moves onto end devices, there is intensive research into which processors should run it.

CPUs, GPUs, and even DSPs can all run inference on end devices, but there is a huge efficiency gap between them. General-purpose processors are suitable for most jobs, but they are not designed for large-scale parallel computing. GPUs and DSPs perform better, but there is still huge room for improvement.

Beyond these, we have seen the emergence of a new kind of processing accelerator, such as the NPU used in the Kirin 970.

Since such accelerators are newly emerging devices, the industry does not yet have a unified naming scheme. Huawei's HiSilicon uses one name (NPU), while Apple uses another.

However, from a universal perspective, we can collectively refer to these processors as neural network IP.

The IP for the Kirin 970's NPU comes from a Chinese IP provider named Cambricon. It is understood that the IP used in the NPU has been optimized rather than taken off the shelf. Huawei has also been working with Cambricon to improve the IP, because in real applications there are sometimes still gaps between actual behavior and what was calculated.

However, we should avoid paying too much attention to the theoretical performance figures of neural network IP. These figures are not necessarily related to actual performance, and because so little is known about the IP, the real-world result cannot be predicted from them.

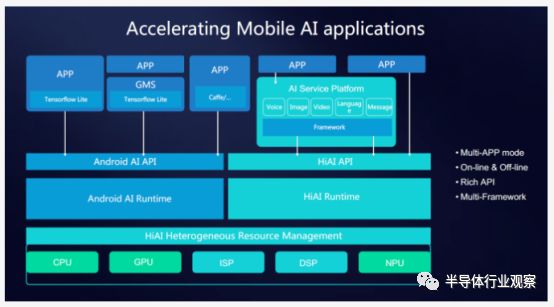

When running a neural network on hardware other than the CPU, the first obstacle is accessing that block through an appropriate API.

Traditional SoC and IP providers offer dedicated APIs and SDKs for developing neural network applications on such hardware. The API provided by HiSilicon can manage not only the CPU but also the GPU and NPU. Although HiSilicon has not yet made the API public, it is understood that it will be opened to developers later this year.

Other vendors, such as Qualcomm, also provide SDKs to help developers run neural networks on hardware such as GPUs and DSPs. Other IP vendors likewise offer their own specialized software development tools.

However, vendor-specific APIs have their limitations. In the future, vendors will need to support a unified API to make development faster and easier.

Google is currently working on this, and the company plans to introduce a module called the NN API (Neural Networks API) in Android 8.1.

Another problem to be aware of is that many current NN APIs support only a subset of functions, for example only some of the NPU's features. Developers who want to exploit the full performance of an NPU still need the vendor's dedicated API.

Kirin 970 NPU performance test

To evaluate this kind of development work, we also need a benchmark that tests how much of the NPU's performance the APIs provided by different vendors can actually exploit.

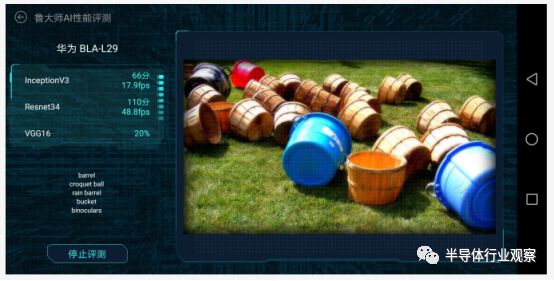

Unfortunately, at this stage there are still very few such benchmarks. Currently, only one Chinese vendor has launched related software: Master Lu, a benchmark popular in China, recently introduced an artificial intelligence test framework that can test the Kirin NPU and Qualcomm's SNPE framework.

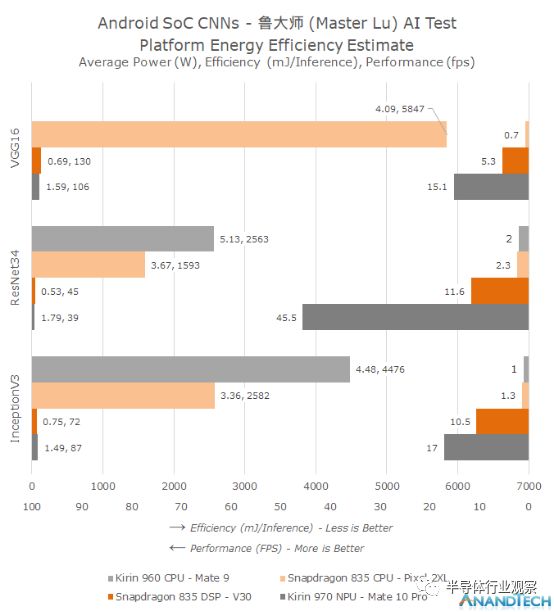

It is understood that the benchmark can currently test three different neural networks: VGG16, InceptionV3 and ResNet34.

This type of software not only tests the performance of the relevant processor but also reports the results graphically along three dimensions: average power, efficiency and absolute performance.

From these graphs, we can see how the processors differ in performance, and how large the gap between the CPU and the NPU is when running the same workload.
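
How the three dimensions relate to each other can be sketched as follows. The figures are hypothetical placeholders, not measurements from the benchmark, and `BenchResult` is a name invented for this illustration. Efficiency is taken here to mean inferences per joule (throughput divided by average power), which is an assumption about how the tool defines it.

```python
from dataclasses import dataclass


@dataclass
class BenchResult:
    name: str
    fps: float          # absolute performance: inferences per second
    avg_power_w: float  # average power in watts

    @property
    def inferences_per_joule(self) -> float:
        # fps / W = (inferences / s) / (J / s) = inferences / J
        return self.fps / self.avg_power_w


# Hypothetical numbers, for illustration only.
results = [
    BenchResult("cpu", fps=1.5, avg_power_w=3.0),
    BenchResult("accelerator", fps=15.0, avg_power_w=0.6),
]

baseline = results[0]
for r in results:
    speedup = r.fps / baseline.fps
    eff_gain = r.inferences_per_joule / baseline.inferences_per_joule
    print(f"{r.name}: {r.fps} fps, {r.avg_power_w} W, "
          f"{r.inferences_per_joule:.2f} inf/J, "
          f"{speedup:.1f}x speed, {eff_gain:.1f}x efficiency")
```

With these made-up inputs, a 10x speedup at one fifth of the power compounds into a 50x efficiency gain, which shows why the efficiency figure can be much larger than the raw speedup.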

When the CPU does the calculations, it usually manages only 1-2 fps, and its power consumption is abnormally high. For example, the CPUs of the Snapdragon 835 and the Kirin 960 have to run at workloads well above their average load.

In comparison, Qualcomm's Hexagon DSP achieves 5 to 8 times the performance of the CPU.

The advantage of Huawei's NPU is even more pronounced: on ResNet34, it achieves about a 4x performance improvement.

It's not hard to see that these performance differences come from the different designs of the processors and the different scenarios they target.

Since a convolutional neural network requires a large amount of parallel computation, a specialized processor such as the Kirin NPU can usually achieve higher performance when executing it.
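
The source of that parallelism is easiest to see in the convolution itself. The following is a minimal pure-Python sketch of a direct 2D convolution (cross-correlation form, no padding, stride 1), not a description of how the NPU implements it; `conv2d_valid` and the small test image are invented for this illustration. Each output element is an independent sum of products, so hardware with many multiply-accumulate units can compute them side by side.

```python
def conv2d_valid(image, kernel):
    """Direct 2D convolution (cross-correlation form, no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Each output element depends only on its own input window,
            # so all (i, j) positions could be computed in parallel.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            out[i][j] = acc
    return out


# Hypothetical 4x4 input and 2x2 kernel, for illustration only.
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
kernel = [
    [1, 0],
    [0, -1],
]

result = conv2d_valid(image, kernel)
# Multiply-accumulate operations: one per kernel element per output element.
macs = len(result) * len(result[0]) * len(kernel) * len(kernel[0])
print(result)
print(macs)
```

Even this toy case needs 36 multiply-accumulates for a 3x3 output; real networks such as the ones in the benchmark apply many larger kernels over many channels, which is where dedicated parallel hardware pays off.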

In terms of power efficiency, we found that the NPU can achieve up to a 50x improvement compared with other processors, and this efficiency advantage is especially obvious when running real convolutional neural networks.

At the same time, we found that Qualcomm's DSP can reach the same level of power efficiency as Huawei's NPU. This seems to indicate that the Hexagon 685 used in Qualcomm's Snapdragon 845 processor can increase performance by a factor of three.

Here, I want to complain about Google's Pixel 2: because the Pixel 2 lacks support for the SNPE framework, it is difficult to actually run the CPU benchmark on its Snapdragon 835.

In a sense, though, this is to be expected. After all, Google will introduce the NN API in Android 8.1, and in the future it will push for acceleration through the standard Android API on the relevant processors.

On the other hand, this will also limit what traditional mobile phone OEMs can do on their own.

Such decisions tend to limit the future development of the ecosystem, which is why we have not seen more mobile GPUs being used to accelerate convolutional neural networks.

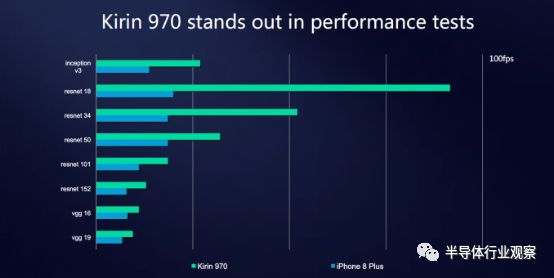

In addition, although the iPhone does not currently support the relevant benchmarks, we can find some clues in the figures released by HiSilicon.

From these figures, we can see that Apple's neural network IP outperforms the Snapdragon 835 but still lags far behind HiSilicon's NPU. However, we cannot independently verify that these figures really correspond to the benchmark in question.

Of course, the most important question is: what benefits can such a processor bring?

HiSilicon says that one clear example is using a CNN for noise reduction, which can improve the accuracy of speech recognition from 80% to 92% in heavy traffic.

In addition, the Mate 10's camera application can identify different scenes through inference on the NPU and then intelligently optimize the camera settings for the scene.

At the same time, the Microsoft translation app on the Mate 10 can use the NPU to accelerate offline translation, which is the application that impressed me most.

The phone's built-in gallery application can also intelligently recognize pictures and classify them.

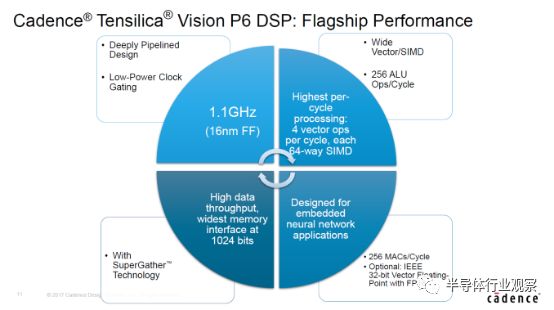

Besides the NPU, Cadence's Tensilica Vision P6 DSP and Qualcomm's Hexagon 680 DSP can also run convolutional neural networks for vision processing, but they are currently not open to end users.

However, this does not mean that the Mate 10 with its NPU delivers a decisively differentiated experience to end users. Neural networks on mobile phones do not yet appear to be the killer application that they are in automobiles and security cameras. In addition, because of the restrictions of the ecosystem, we can only see related applications on the Mate 10. Whether we will see them in more scenarios, and whether Huawei is willing to work together with developers, remains open to discussion, but Huawei's innovation in this area still deserves recognition.

As mentioned before, the application jointly developed by Huawei and Microsoft seems to be the most attractive one on the Mate 10, so it is a good basis for further exploration.

At present, the application can intelligently recognize foreign-language text and translate it. So, could the same approach be used for AR applications in the future?

MediaTek showed us a related recognition example at CES: a video conferencing encoder that uses a CNN to recognize content in images and video and feeds the result back to the encoder to improve video quality.

In the future, it is conceivable that more and more devices will adopt this type of IP and that developers will find it easier to build related applications.

Final thoughts

In this article, I don't want to emphasize how advanced the Kirin 970 is. I just hope to take this opportunity to show that there will be many exciting changes in the competition and development pattern of high-end Android smartphone processors in the future.

With the 10th anniversary of the iPhone smartphone ecosystem, we are seeing the emergence of more and more vertically integrated devices.

It's not that Apple must be the rule maker, but in a more mature ecosystem, companies need to be able to control their own development roadmap. Otherwise, mobile phone manufacturers will have a hard time distinguishing themselves from other vendors, let alone providing differentiated features for users or competing with other vendors.

Apple realized this very early. Huawei is so far the only other OEM that has been able to set itself up in the same way.

At the same time, there are many quasi-independent manufacturers working on their own chips, which are built around key components such as the CPU and GPU licensed from IP vendors.

Fundamentally speaking, the Kirin 970 does not differ much from the Snapdragon 835 in CPU performance and power. Any difference shows up only in how each implements the Cortex-A73.

Considering that the CPU used in the Snapdragon 820 differs only slightly from Samsung's self-developed CPU, that the difference is not obvious in practical applications, and that Samsung has so far had no plans to develop and integrate independent CPUs, it makes sense for Huawei to use an ARM CPU.

Qualcomm has a certain degree of control through its independent CPU and GPU designs, which sets it well apart from other manufacturers.

Imagine that NVIDIA, the leader in desktop GPUs, has a 33% efficiency advantage over its competitors. When that advantage grows to 75-90%, the choice is self-evident.

In this case, vendors can compensate for efficiency and performance deficits by using larger GPUs, a difference that end users hardly perceive.

However, this is an unsustainable solution because it erodes the manufacturer's gross margin.

In addition to the CPU, GPU and modem IP, a phone needs many more components, which I won't go into here.

For example, the Cadence Tensilica Vision P6 DSP used in the Kirin 970 does improve the performance of the camera, but it also needs to be supported from the software side.

The NPU is an emerging IP that is still in its infancy. Does the Kirin 970 have many competitors? Not at all. Does this feature add competitiveness to the product? It does, but perhaps not as much as it seems.

The slow development of the software ecosystem will indeed hold back the mobile phone industry, but without the support of the related hardware, many applications can only be implemented in software.

It seems inevitable that the whole industry will adopt Huawei's strategy in the future.

HiSilicon's NPU proves that HiSilicon, as a chip design company, can also design a processor that rivals those of Qualcomm and Samsung. However, HiSilicon's release schedule does not follow the release cycle of traditional Android phone manufacturers, so we expect new processors to appear that surpass the Kirin 970 in performance.

The reality is that Huawei is one of only two OEMs that integrate chip design and end products (Editor's note: Samsung arguably counts as well, but Samsung seems to rely more on Qualcomm's solutions), and the only one among Android vendors. In the past few years, this manufacturer has come a long way and made many improvements. Most importantly, Huawei has consistently been able to set goals and execute on them, and it remains firmly committed to the right direction for its mobile business, which is the key reason for its success.

But for this Chinese manufacturer, the road ahead is still very long.
