Detailed analysis of the meaning, challenges, types, and applications of neural networks

Editor's note: Statsbot deep learning developer Jay Shah takes you on a tour of neural networks and introduces popular types such as autoencoders, convolutional neural networks, and recurrent neural networks.

Today, people use neural networks to solve many business problems such as sales forecasting, customer research, data validation, and risk management. For example, at Statsbot we apply neural networks to time series prediction, anomaly detection, and natural language understanding.

In this article, we will explain what neural networks are, the primary challenges faced by beginners using neural networks, the types of popular neural networks and their applications. We will also describe how neural networks can be applied to different industries and sectors.

Neural network idea

Recently, the term "neural network" has created a lot of buzz in computer science and has attracted a great deal of attention. But what is a neural network, how does it work, and is it really useful?

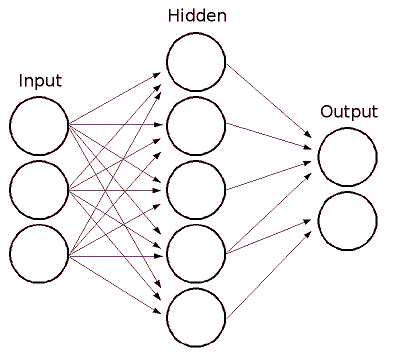

Essentially, a neural network consists of layers of interconnected computational units called neurons. The network transforms the data layer by layer until it can classify it as an output. Each neuron multiplies an initial value by a weight, adds the result to the other values entering the same neuron, adjusts the sum by the neuron's bias, and then normalizes the output with an activation function.
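The computation performed by a single neuron can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the sigmoid activation and the input, weight, and bias values are assumptions chosen for the example.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs, shifted by the bias, then activated."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values, chosen only for illustration.
activation = neuron_output(inputs=[0.5, -1.0, 2.0],
                           weights=[0.4, 0.3, 0.1],
                           bias=0.1)
print(round(activation, 4))  # weighted sum is 0.2, so this prints 0.5498
```

A full layer repeats this computation once per neuron, each with its own weights and bias.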

Iterative learning process

A key feature of neural networks is the iterative learning process, in which records (rows) are presented to the network one at a time and the weights associated with the input values are adjusted each time. After all cases have been presented, the process often starts over. During this learning phase, the network trains itself by adjusting the weights so that it predicts the correct class label for the input samples.

The advantages of neural networks include high tolerance to noisy data and the ability to classify patterns on which they have not been trained. The most popular neural network algorithm is the backpropagation algorithm.

Once the network architecture has been set up for a particular application, the network is ready to be trained. To start, the initial weights (discussed in the next section) are chosen randomly. Then training (learning) begins.

The network processes the records in the "training set" one by one using the weights and functions in the hidden layers, and then compares the actual output with the expected output. The error is propagated back through the system, the system adjusts the weights accordingly, and the adjusted weights are applied to the next record.

This process repeats as the weights are adjusted. During training, the same data set is processed many times as the connection weights are continually refined.
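The record-by-record loop described above can be sketched as follows. To keep the example short, it trains a single sigmoid neuron instead of a multi-layer network, so the backward pass reduces to one update rule (the gradient of the cross-entropy loss). The OR data set, the learning rate, and the number of epochs are assumptions made for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(inputs, weights, bias):
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)

def train(records, epochs=2000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    # Initial weights are chosen randomly.
    weights = [rng.uniform(-0.5, 0.5) for _ in records[0][0]]
    bias = rng.uniform(-0.5, 0.5)
    history = []  # summed squared error per pass over the data
    for _ in range(epochs):  # the same data set is processed many times
        total_error = 0.0
        for inputs, target in records:  # one record at a time
            output = forward(inputs, weights, bias)
            error = target - output  # expected output minus actual output
            # Adjust the weights; the next record sees the updated values.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
            total_error += error ** 2
        history.append(total_error)
    return weights, bias, history

# Hypothetical training set: the logical OR function.
OR_RECORDS = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias, history = train(OR_RECORDS)
predictions = [1 if forward(x, weights, bias) >= 0.5 else 0
               for x, _ in OR_RECORDS]
```

In a network with hidden layers, the same error signal would be passed backward through each layer in turn using the chain rule, which is what backpropagation does.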

So what is hard about it?

One of the challenges that beginners encounter when learning neural networks is understanding what exactly happens at each layer. We know that after training, each layer extracts progressively higher-level features of the data set (input), until the final layer decides what the input features refer to. How is this done?

Instead of prescribing exactly which feature we want the network to amplify, we can let the network make that decision. For example, we simply feed the network an arbitrary image or photo and let it analyze the picture. We then pick a layer and ask the network to enhance whatever it detected at that layer. Each layer of the network deals with features at a different level of abstraction, so the complexity of the features we generate depends on which layer we choose to enhance.

Popular neural network types and uses

In this neural network article for beginners, we will discuss autoencoders, convolutional neural networks, and recurrent neural networks.

Autoencoders

Autoencoders are based on the observation that random initialization is a bad idea, and that pre-training each layer with an unsupervised learning algorithm can yield better initial weights. An example of such an unsupervised algorithm is Deep Belief Networks. Recently, some studies have attempted to revive this field, for example with variational methods for probabilistic autoencoders.

Autoencoders are rarely used in practical applications. More recently, batch normalization has made deeper networks trainable, and residual learning lets us train arbitrarily deep networks from scratch. With appropriate dimensionality and sparsity constraints, autoencoders can learn data projections that are more interesting than those produced by PCA or other basic techniques.

Let's take a look at two interesting practical applications of autoencoders:

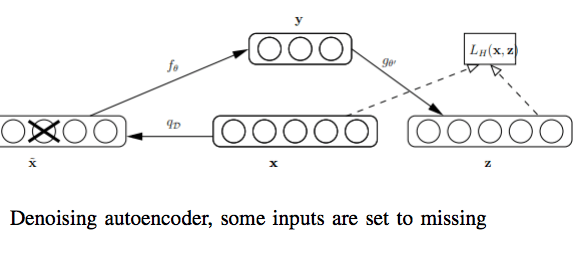

A denoising autoencoder built from convolutional layers can remove noise efficiently.

In a denoising autoencoder, some inputs are randomly set to missing.

In the above figure, the stochastic corruption process randomly sets some of the inputs to zero, forcing the denoising autoencoder to predict the missing (corrupted) values for randomly selected subsets of missing patterns.

Dimensionality reduction for data visualization is attempted using methods such as principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE). Both methods have been used together with neural network training to increase the accuracy of model predictions. At the same time, the prediction accuracy of an MLP neural network depends heavily on the network architecture, the preprocessing of the data, and the type of problem the network was developed for.
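The corruption step can be sketched as a small function. This is a minimal illustration assuming masking noise with a fixed corruption probability; the function name, the `corruption_level` parameter, and the sample values are hypothetical.

```python
import random

def corrupt(inputs, corruption_level=0.3, seed=None):
    """Return a copy of inputs with a random subset of values set to zero."""
    rng = random.Random(seed)
    return [0.0 if rng.random() < corruption_level else x for x in inputs]

# Hypothetical clean input vector.
clean = [0.9, 0.1, 0.4, 0.7, 0.3, 0.8]
noisy = corrupt(clean, corruption_level=0.5, seed=42)

# During training, the autoencoder receives `noisy` as input,
# while `clean` remains the target it has to reconstruct.
```

Because the target is the uncorrupted vector, the network cannot simply copy its input and has to learn how the values relate to each other.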

Convolutional neural network

Convolutional networks take their name from the "convolution" operation. The main purpose of convolution is to extract features from the input image. Convolution preserves the spatial relationship between pixels by learning image features from small patches of the input data. Here are some examples of successful applications of convolutional networks:
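To make the operation concrete, the sketch below slides a small kernel over an image and computes one output value per patch. Like most deep learning libraries, it does not flip the kernel (strictly speaking, this is cross-correlation). The image and the hand-picked vertical-edge kernel are assumptions for illustration; in a trained CNN, the kernel values are learned.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image with no padding and a stride of 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only in the column where the edge sits, which shows how the output keeps the spatial layout of the input.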

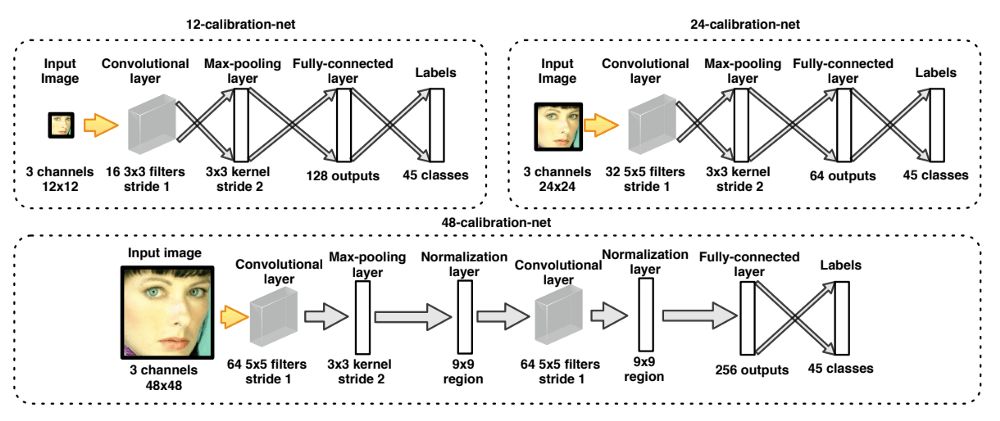

A face detection study by Stevens Institute of Technology and Adobe uses a cascade of convolutional neural networks to detect faces at high speed. The detector evaluates the input image at low resolution to quickly reject non-face regions, and then carefully processes the challenging regions at higher resolution to detect faces accurately.

The cascade also includes calibration networks that speed up detection and improve the quality of the bounding boxes.

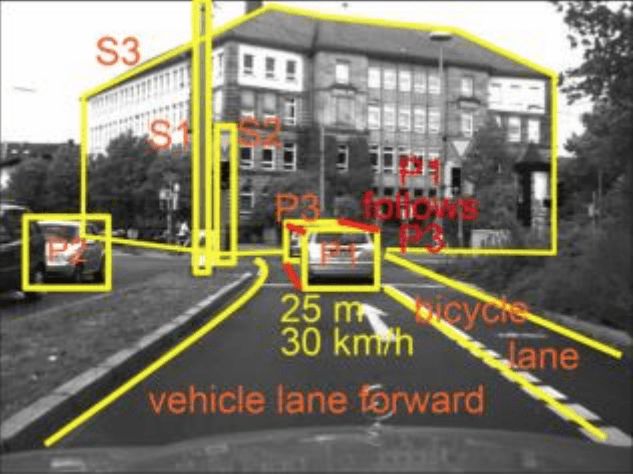

In self-driving car projects, depth estimation is an important consideration because it helps ensure the safety of passengers and other vehicles. CNNs have been used for this purpose in projects such as NVIDIA's self-driving car.

Because CNN layers can process their inputs through multiple parameters, they are extremely versatile. Variants of these networks also include convolutional deep belief networks. Traditionally, convolutional neural networks are used for image analysis and object recognition.

As a fun aside, one project uses a CNN to drive a car in a game simulator and predict the steering angle.

Recurrent neural network

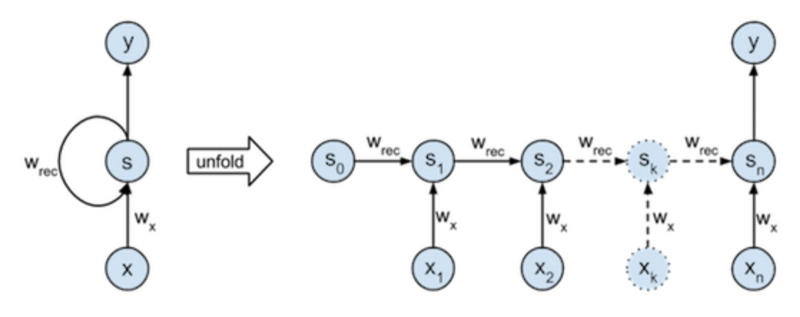

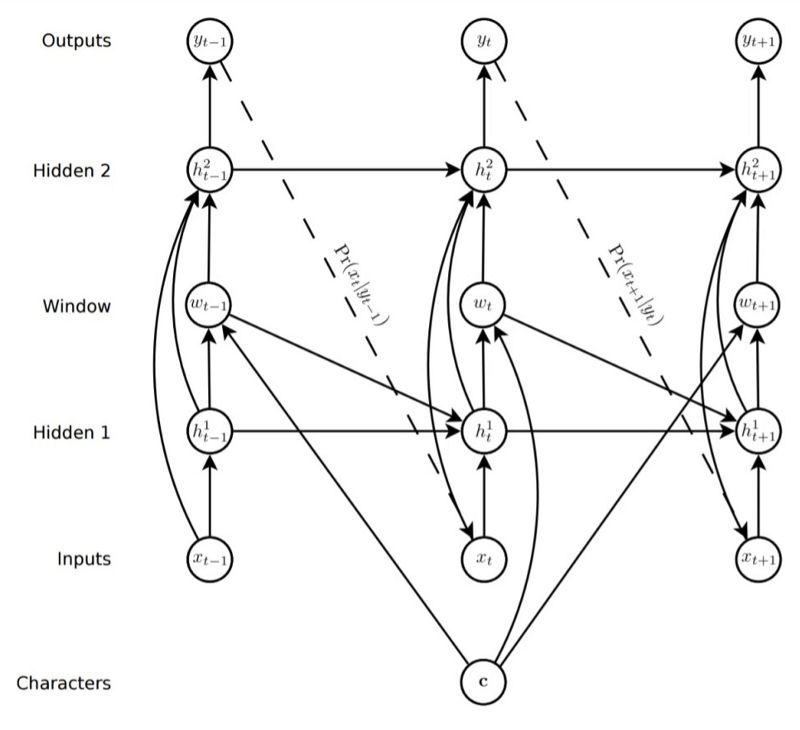

A recurrent neural network (RNN) used for sequence generation is trained by processing real data sequences one step at a time and predicting what comes next.

Assuming the predictions are probabilistic, a trained network can generate new sequences by iteratively sampling from its output distribution and feeding each sample back in as the input for the next step. In other words, the network treats its own inventions as if they were real, much like a person dreaming.

RNNs can also be used for language-driven image generation, for example to generate handwriting for a given text. To meet this challenge, a soft window is convolved with the text string and fed to the prediction network as an additional input. The parameters of the window are output by the network at the same time as it makes its predictions, so that the network dynamically determines the alignment between the text and the pen position. Simply put, the network learns to decide which character to write next.
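The sampling loop can be sketched with a tiny recurrent cell. Everything here is an assumption for illustration: the three-symbol vocabulary, the hidden size, and above all the weights, which are random and untrained, so the output only demonstrates the mechanism of feeding each sample back in, not a learned pattern.

```python
import math
import random

VOCAB = ["a", "b", "c"]  # hypothetical vocabulary
HIDDEN = 4               # hypothetical hidden-state size

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_weights(rng, rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def rnn_step(token, hidden, w_in, w_rec, w_out):
    """One step: update the hidden state, return next-token probabilities."""
    # A one-hot input simply selects column `token` of the input weights.
    new_hidden = [
        math.tanh(w_in[h][token]
                  + sum(w_rec[h][k] * hidden[k] for k in range(HIDDEN)))
        for h in range(HIDDEN)
    ]
    scores = [sum(w_out[v][h] * new_hidden[h] for h in range(HIDDEN))
              for v in range(len(VOCAB))]
    return softmax(scores), new_hidden

def generate(length, seed=0):
    rng = random.Random(seed)
    # Untrained random weights stand in for a trained network.
    w_in = make_weights(rng, HIDDEN, len(VOCAB))
    w_rec = make_weights(rng, HIDDEN, HIDDEN)
    w_out = make_weights(rng, len(VOCAB), HIDDEN)
    hidden, token, sequence = [0.0] * HIDDEN, 0, []
    for _ in range(length):
        probs, hidden = rnn_step(token, hidden, w_in, w_rec, w_out)
        # Sample from the output distribution, then feed the sample back in.
        token = rng.choices(range(len(VOCAB)), weights=probs)[0]
        sequence.append(VOCAB[token])
    return "".join(sequence)

sample = generate(10)
```

With trained weights in place of the random ones, the same loop would produce sequences that resemble the training data.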

Given a particular input, the trained neural network can generate the expected output. If we have a well-fitting network that models a sequence of known values, then we can use it to predict future results. Stock market forecasting is an obvious example.
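Before a network can model a sequence of known values, the sequence is usually cut into input/target pairs. The sketch below shows one common way to do that with a sliding window. The function name, the window size, and the price series are hypothetical.

```python
def make_windows(series, window_size):
    """Pair each run of `window_size` values with the value that follows it."""
    return [
        (series[i:i + window_size], series[i + window_size])
        for i in range(len(series) - window_size)
    ]

# Hypothetical daily closing prices.
prices = [10, 11, 13, 12, 14, 15]
pairs = make_windows(prices, window_size=3)
print(pairs)
# [([10, 11, 13], 12), ([11, 13, 12], 14), ([13, 12, 14], 15)]
```

Each pair gives the network a short history as input and the next value as the expected output.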

Application of neural networks in different industries

Neural networks are widely used to solve real-world business problems such as sales forecasting, customer research, data validation, and risk management.

Marketing

Targeted marketing involves market segmentation, which divides the market into distinct customer groups based on customer behavior.

Neural networks can segment customers by basic characteristics, including demographics, economic status, location, purchasing patterns, and attitude toward the product. Unsupervised neural networks can group customers automatically based on the similarity of their attributes, while supervised neural networks can be trained on a set of customers to learn the boundaries between customer segments.

Sales

Neural networks have the ability to consider multiple variables simultaneously, such as market demand, customer income, population, and product prices. For supermarkets, forecasting sales is very helpful.

If two products are related over time, for example when a customer who buys a printer comes back for a new cartridge within three to four months, the retailer can use this information to contact the customer in time and reduce the chance that the customer buys the product from a competitor.

Banking and finance

Neural networks have been successfully applied to derivative securities pricing and hedging, futures price forecasting, exchange rate forecasting, and stock performance prediction. Traditionally, such software was driven by statistical techniques. Today, however, neural networks are the underlying technology driving these decisions.

Medicine

Neural network research in medicine is a growing trend. It is believed that neural networks will be widely used in biomedical systems over the next few years. For the time being, most studies model parts of the human body and identify diseases from various scans.

Conclusion

Perhaps neural networks can give us some insight into the "easy problems" of cognition: how does the brain process environmental stimulation? How does the brain integrate information? The real question, however, is why and how this processing in humans is accompanied by an experienced inner life, and whether a machine can achieve such self-awareness.

This makes us wonder whether neural networks could become a tool for artists, a new way of remixing visual concepts, and perhaps even shed light on the roots of the creative process in general.

All in all, neural networks make computer systems more human, and therefore more useful. So the next time you wish your brain were as reliable as a computer, think again: you already have a super neural network installed in your head, and you should be grateful for it!

I hope this introduction to neural networks for beginners can help you create your first neural network project.
